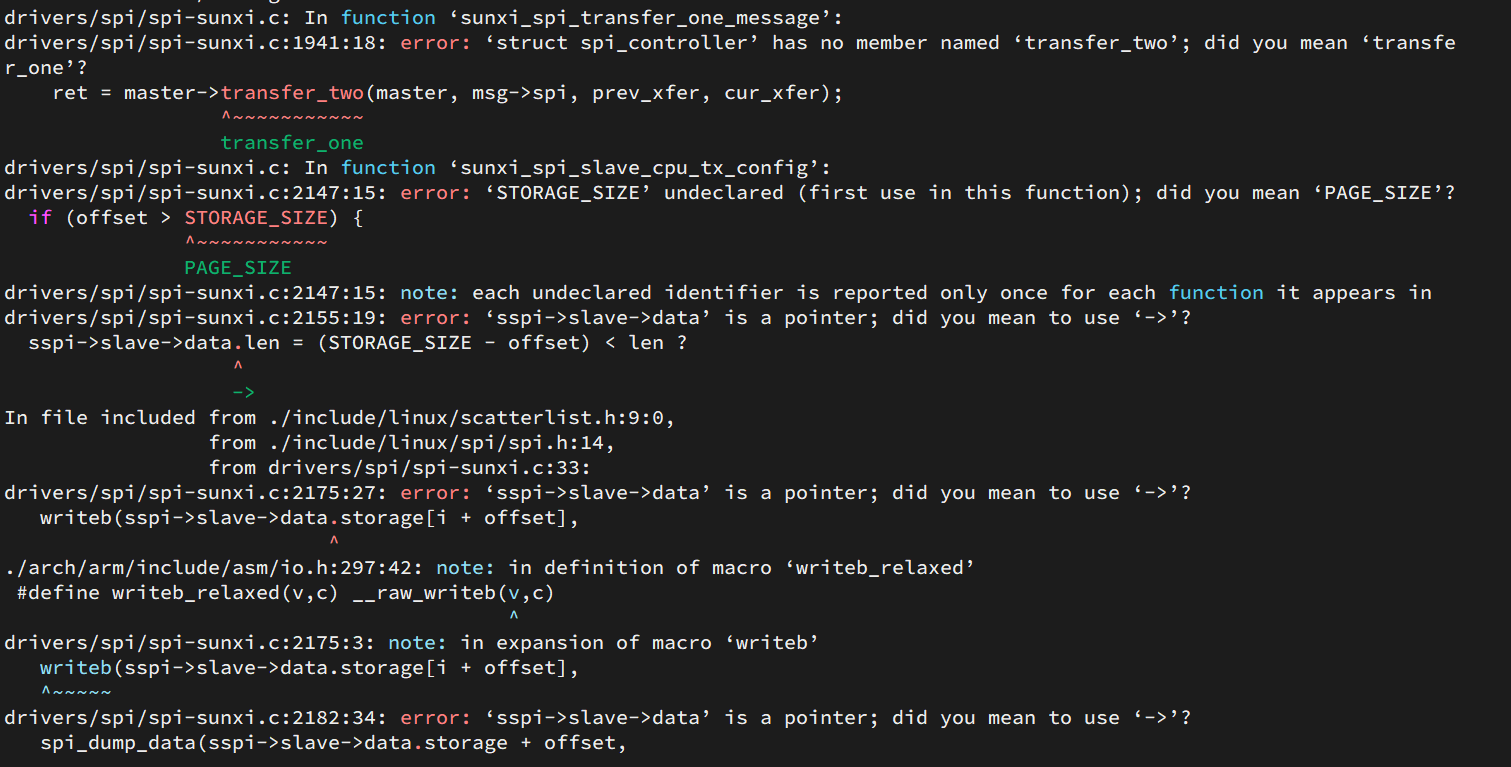

T113 SPI vendor driver has a problem

-

I'm using the buildroot from https://github.com/YuzukiHD/Buildroot-YuzukiSBC.

As soon as I use the spidev driver, the system hangs. -

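I've run into SPI hangs too, though not with that code; mine was the code supplied by the distributor. After resetting the FIFO, the driver read data right away without waiting for the reset to finish, and that is what hung. I changed it so that after the FIFO reset it checks that the reset has completed before moving on, and the hang went away.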

-

@qinlinbin Do you have the actual code?

-

@yy_fly For reference only!!! This is in the spi-sunxi.c file.

    /* reset fifo */
    static void spi_reset_fifo(void __iomem *base_addr)
    {
        u32 reg_val = readl(base_addr + SPI_FIFO_CTL_REG);
        u32 poll_time = 0x7ffffff;

        reg_val |= (SPI_FIFO_CTL_RX_RST | SPI_FIFO_CTL_TX_RST);
        /* Set the trigger level of RxFIFO/TxFIFO. */
        reg_val &= ~(SPI_FIFO_CTL_RX_LEVEL | SPI_FIFO_CTL_TX_LEVEL);
        reg_val |= (0x20 << 16) | 0x20;
        writel(reg_val, base_addr + SPI_FIFO_CTL_REG);

        /* Added: poll until both reset bits read back as clear, or the poll counter runs out. */
        reg_val = readl(base_addr + SPI_FIFO_CTL_REG);
        while ((reg_val & (SPI_FIFO_CTL_RX_RST | SPI_FIFO_CTL_TX_RST)) && --poll_time)
            reg_val = readl(base_addr + SPI_FIFO_CTL_REG);
    }

    static int sunxi_spi_cpu_readl(struct spi_device *spi, struct spi_transfer *t)
    {
        struct sunxi_spi *sspi = spi_master_get_devdata(spi->master);
        void __iomem *base_addr = sspi->base_addr;
        unsigned rx_len = t->len;    /* number of bytes to receive */
        unsigned char *rx_buf = (unsigned char *)t->rx_buf;
        unsigned int poll_time = 0x7ffffff;
        unsigned int i, j;
        u8 buf[64], cnt = 0;

        while (rx_len && (--poll_time > 0)) {
            /* rxFIFO counter */
            if (spi_query_rxfifo(base_addr)) {
                *rx_buf++ = readb(base_addr + SPI_RXDATA_REG);
                --rx_len;
            }
        }
        /* The rest is unchanged. */
    }

-
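One thing to note about the change above: if the poll counter runs out, the function simply returns and the caller cannot tell that the reset never finished. As a rough sketch of the same idea, the wait could be pulled into a small helper that reports a timeout. `wait_bits_clear` and its parameters are made-up names for illustration and are not part of spi-sunxi.c; it is written as plain C over a `volatile` pointer instead of kernel `readl()` so it can be tried in userspace.

```c
#include <stdint.h>

/* Hypothetical helper (not from spi-sunxi.c): poll a register until every
 * bit in `mask` reads back as 0, giving up after `max_polls` reads.
 * Returns 0 once the bits are clear, -1 if they never cleared. */
static int wait_bits_clear(const volatile uint32_t *reg, uint32_t mask,
                           uint32_t max_polls)
{
    while (max_polls--) {
        if ((*reg & mask) == 0)
            return 0;   /* reset bits cleared: safe to continue */
    }
    return -1;          /* timed out: caller should not touch the FIFO */
}
```

In the driver this would roughly correspond to polling `base_addr + SPI_FIFO_CTL_REG` with the mask `SPI_FIFO_CTL_RX_RST | SPI_FIFO_CTL_TX_RST` and bailing out on -1. In kernel code, `readl_poll_timeout()` from `<linux/iopoll.h>` covers the same kind of wait.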

@qinlinbin Thanks! With this change it no longer hangs. But when I run spidev_test, everything I receive is 0, even though I have shorted MOSI and MISO together.
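For anyone who wants to check the loopback outside of spidev_test, here is a minimal sketch of a full-duplex transfer through the standard spidev ioctl interface, plus a comparison of what came back. The helper names `spi_loopback_xfer` and `count_mismatches` are invented for this example, and the clock speed and word size are assumptions to adjust for your board.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <linux/spi/spidev.h>

/* Hypothetical helper: run one full-duplex transfer on an already-open
 * spidev file descriptor. Returns 0 on success, -1 if the ioctl fails. */
static int spi_loopback_xfer(int fd, const uint8_t *tx, uint8_t *rx,
                             size_t len, uint32_t speed_hz)
{
    struct spi_ioc_transfer tr;

    memset(&tr, 0, sizeof(tr));
    tr.tx_buf = (unsigned long)tx;   /* buffers are passed as integers */
    tr.rx_buf = (unsigned long)rx;
    tr.len = (uint32_t)len;
    tr.speed_hz = speed_hz;
    tr.bits_per_word = 8;            /* assumption: 8-bit words */

    return ioctl(fd, SPI_IOC_MESSAGE(1), &tr) < 0 ? -1 : 0;
}

/* Count the positions where the received byte differs from the sent byte. */
static size_t count_mismatches(const uint8_t *tx, const uint8_t *rx, size_t len)
{
    size_t i, bad = 0;

    for (i = 0; i < len; i++)
        if (tx[i] != rx[i])
            bad++;
    return bad;
}
```

To use it, open the spidev node with `open()` (the exact path depends on the board's device tree), send a pattern with non-zero bytes, and pass both buffers to `count_mismatches`. With MOSI shorted to MISO the count should be 0; an all-zero receive buffer shows up as a mismatch at every non-zero byte sent.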

-

@yy_fly

You'll need to dig into that one yourself. -

@qinlinbin

OK, thanks! -

Try this one.